It's been one year since Sony revealed Project Morpheus, their VR headset for the PS4, with plans announced earlier this week for release next year. In that year VR has exploded, with a lot of money being funneled into VR research and development all over the place. At GDC this year, I tried five different VR headsets, each with its own proprietary hardware and software solutions for VR problems. Project Morpheus was not available to try without an appointment, but there was a talk about what Sony is doing to win the tech war for consumer VR.

Entitled "Beyond Immersion: Project Morpheus and PlayStation," the one-hour talk featured three sections by three separate speakers: Chris Norden covered the hardware and software upgrades made to the tech over the last year, Nicolas Doucet covered Sony's proprietary demos and its attempts to use game design to teach players the tech's limitations, and George Andreas discussed drama and "transformative presence" (making the experience feel more real).

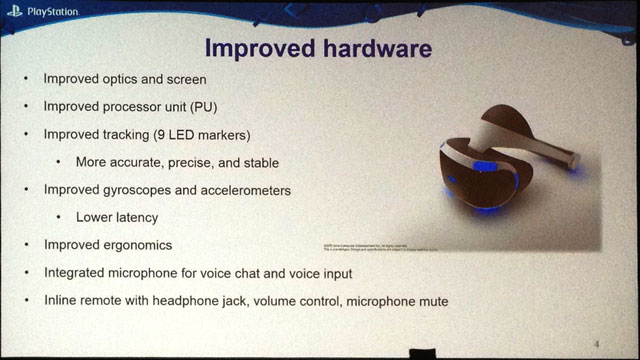

Chris Norden, Senior Staff Engineer at SCEA, primarily addressed how Sony had improved the hardware in every possible way and planned to keep doing so. While early reports on Valve's HTC Vive VR headset suggest it is more powerful than the current competition, the VR arms race continues, and I don't doubt that by this time next year the screen resolutions and other specs will have evened out once again, with the Oculus Rift, Project Morpheus, and Valve's VR system all sharing very similar specs.

Right now Sony's device is similar to the Oculus Rift's DK2 units: a 1920×1080 screen (960×1080 per eye, lower than Oculus' new Crescent Bay and Valve's Vive); a field of view of about 100 degrees, made possible by lenses set up to distribute more pixels to the center of the view; and a 120Hz refresh rate, which he said the PS4 can produce, but only for the VR headset.
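To put those numbers in perspective, here is a minimal Python sketch of the arithmetic behind them. It assumes the panel is split evenly between the two eyes, and the function names are made up for illustration; nothing here comes from Sony's SDK.

```python
def per_eye_resolution(panel_width, panel_height):
    """Split one side-by-side panel evenly between two eyes (an assumption)."""
    return panel_width // 2, panel_height


def frame_budget_ms(refresh_hz):
    """Milliseconds available to render a single frame at a given refresh rate."""
    return 1000.0 / refresh_hz


eye_w, eye_h = per_eye_resolution(1920, 1080)
budget_120 = frame_budget_ms(120)
budget_60 = frame_budget_ms(60)

print(f"Per-eye resolution: {eye_w}x{eye_h}")
print(f"Frame budget at 120Hz: {budget_120:.2f} ms")
print(f"Frame budget at 60Hz: {budget_60:.2f} ms")
```

The takeaway is that a game targeting 120Hz has roughly half the time per frame that a 60Hz game does, which is why that refresh rate is a demanding target for any hardware.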

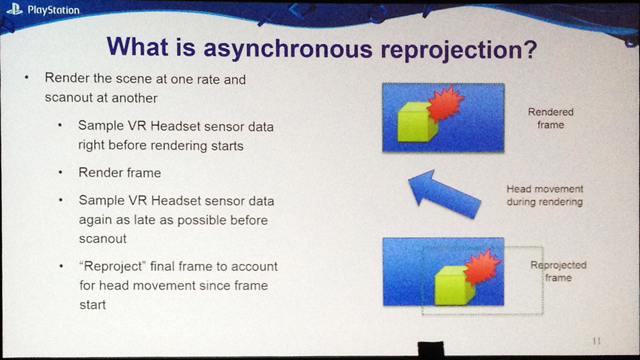

Norden stressed two things heavily in his portion of the talk: the need to render at 120fps for VR to avoid "judder" (what looks like weird visual jumps or skips), and the need for what he called "asynchronous repositioning" to avoid latency issues. Because the player's head keeps moving during the fraction of a second it takes to render a frame, asynchronous repositioning (sometimes called "time warping") performs a calculation at the end of the render and shifts the finished image by the amount the head has moved in the meantime, which results in very low latency. Project Morpheus can even use this technique to "fake" 120fps motion from a 60fps source, though it starts to show artifacts when there is a lot of motion close to the player.
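For the curious, the idea can be sketched in a few lines of Python. This is a heavily simplified illustration, not Sony's implementation: it assumes head rotation around one axis only, treats degrees of rotation as mapping linearly onto pixels (ignoring lens distortion), and represents a frame as a plain list of pixel rows. All function names are hypothetical.

```python
def yaw_to_pixel_shift(delta_yaw_deg, fov_deg, width_px):
    """Approximate horizontal shift for a small head turn (linear assumption)."""
    return round(delta_yaw_deg / fov_deg * width_px)


def reproject(frame, shift_px, fill=0):
    """Shift each row left by shift_px (right if negative), padding the gap."""
    width = len(frame[0])
    out = []
    for row in frame:
        if shift_px >= 0:
            k = min(shift_px, width)
            out.append(row[k:] + [fill] * k)
        else:
            k = min(-shift_px, width)
            out.append([fill] * k + row[:width - k])
    return out


def display_at_double_rate(rendered, yaw_at_render, yaw_at_display, fov_deg):
    """Show every rendered frame twice, re-shifted with the newest head yaw."""
    width = len(rendered[0][0])
    shown = []
    for i, frame in enumerate(rendered):
        for j in range(2):
            delta = yaw_at_display[2 * i + j] - yaw_at_render[i]
            shift = yaw_to_pixel_shift(delta, fov_deg, width)
            shown.append(reproject(frame, shift))
    return shown


# One rendered frame, ten pixels wide, displayed twice while the head turns right.
frame = [[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]]
shown = display_at_double_rate([frame], [0.0], [10.0, 20.0], fov_deg=100)
for image in shown:
    print(image[0])
```

Turning the head to the right slides the picture to the left, and the newly exposed edge is simply padded here. Because the whole image is shifted as one flat picture, a sketch like this cannot account for nearby objects that should move differently from the background, which fits the close-range artifacts Norden described.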

I'm sure a lot of that sounds like technical mumbo-jumbo, so let's zoom in on what will matter to you. Most of these are known issues in the VR community and are being addressed by people developing for Oculus and other VR headsets as well. One indie developer told me that an advantage of Sony's hardware is that some of these housekeeping operations will be handled by Sony at the hardware or firmware level, meaning developers won't necessarily have to write their own code for complex operations like asynchronous repositioning or interpreting the motion data from the headset, the DualShock 4 controller, or PS Move peripherals. All of that is handled by Project Morpheus itself.

In this way, Norden was absolutely right that Sony has an advantage over other VR developers, in that every PS4/Project Morpheus gameplay experience will be functionally identical. This may seem like a drawback, or like giving players a kind of fast-food VR experience, but it potentially makes things easier on the developer end. Since developers have a benchmark for the limits of Sony's hardware, they won't have to worry about the limitations of different headsets (some of which are much less capable) or different computer processors that may or may not be able to reach the high-end benchmarks solid VR needs to feel immersive. They know exactly what the hardware and software specs are, and can develop within that framework. Considering the vastly different VR options on the PC market, VR development there has to be much more flexible to hit all the targets.

Additionally, of the independent VR titles I saw this year, every single one either confirmed or implied heavily that they were developing their games for Project Morpheus in addition to their PC builds. In most cases, they were simply waiting for their dev kits to show up before they started creating PS4 builds, and in some cases they had already created non-VR versions for Sony's hardware.

The other major problem associated with VR, as it stands today, is the relatively small area in which a person can physically move while wearing a VR headset. As with the Move or the Kinect, what most VR headsets can do within an environment is limited not only by the range of their tracking cameras (if they have one), but also by the physical cord connecting them to the hardware.

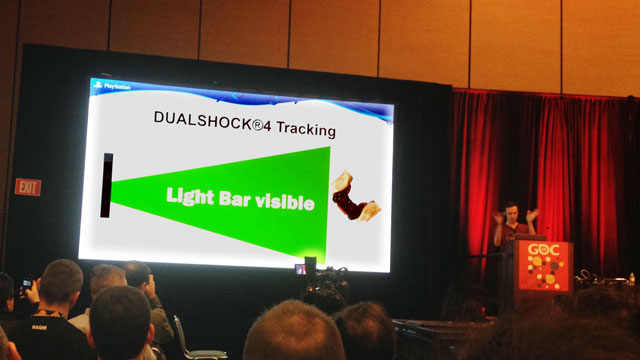

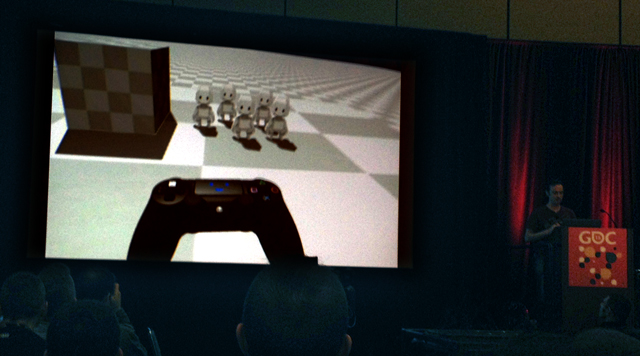

In order to solve this problem, Nicolas Doucet, Creative Director at SCE Japan (whose Team Asobi created the PS4 Move demo, The Playroom), has been developing demos that explore what kinds of gameplay experiences can be had using Project Morpheus. Many of these involved literally modeling the controller into the games and creating guides for where it should be placed, or creating artificial boundaries outside of which it stops working. In one of these demos the controller turned into a spaceship in an After Burner-style game controlled by moving it around, and in another it became the handlebars for a motorbike racing game. If the controller was moved out of the camera's range, the handlebars disconnected from the steering column.

While he offered other solutions, it quickly became clear just how narrow the limitations of the tech are in terms of movement in the space. It's no surprise that Doucet's demos all had the player facing one direction and not moving too much; first-person movement in VR space that doesn't correspond to actual body position can feel wrong and cause vertigo. It's why a good number of games made for VR are flight sims or third-person games. Tethered VR has unique opportunities for platform gaming, which were shown off in a host of demos featuring little robots that looked similar to Sackboy, where you float as a godlike presence above the character you are playing and watch their actions play out directly below or to the side.

The final presentation, by George Andreas, Creative Director of SCE London, was the oddest of the three. It was entirely about their demo, which almost no one in the room was going to be allowed to experience at GDC, and they didn't show any video from it. While it presented some ideas about immersion in a scene, it was not terribly informative. How Sony's development will change with the reveal of VR systems like the Vive remains to be seen, since the Vive uses motion-tracking devices on the outside of its headset to map the local area (rather than using an external camera to track the headset), giving the user a wider range of options for moving within a space.

Between Norden and Doucet, it was clear that Sony is investing a lot of time and money into Project Morpheus. Doucet mentioned that the demos we were shown videos of were often completed in a single week. Norden stressed that while another year for the tech might seem long, it was to the benefit of everyone in the VR community that they develop a product that was as good as possible before launching. It's clear that Sony expects its product to be the benchmark for a broad audience's experience of virtual reality.